Game Composer Guy Whitmore Interviewed

Veteran game composer Guy Whitmore has been in the industry for more than 20 years. He specializes in adaptive music and is the composer of notable game scores such as Die Hard: Nakatomi Plaza, Shivers, and Shogo.

—

June 21, 2017

1) Tell us a bit about how you started your career as a game composer and how you ended up in the video game industry.

I began studying classical guitar at Northwestern University. I did a little bit of composition there and had one computer music course, but I didn’t think composition would be my focus until graduate school, when I started a program on guitar. In the middle of it, I began taking writing lessons and electroacoustic music courses and realized composition was the way I should go. I focused on music for theater and commercials at the beginning, but then a friend called me and told me there was a composition job at a company called Sierra On-Line, and I said “Oh really! I haven’t thought about video games”. But then I realized this job would match up pretty well with what I was composing, so I applied and, to cut a long story short, I had an interview and got the job. Then I did some freelance work, worked at Monolith Productions, did some more freelance work, went to work for Microsoft as an audio director for six years, and now I’ve been working at PopCap for another six years. So, that’s 20+ years now!

2) Why did you choose to focus on adaptive music?

Adaptive music happened to me as I was doing my first couple of game projects. It was evident that video games are a non-linear medium. So, even before I knew what the word ‘adaptive’ meant regarding music, I naturally started gravitating towards non-linear concepts. This was in the PC CD-ROM days. I was just taking little music clips, one-phrase long each, and I took them to my programmers and said “Here… Call these randomly and put a random amount of space between them”. That was an initial idea, not knowing what other games were doing, but thinking what would work well in a nonlinear context.
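As an illustration only (this is not Whitmore's code, and the clip names and gap range are hypothetical), the idea of calling one-phrase clips at random with a random amount of space between them might be sketched in Python like this:

```python
import random


def schedule_clips(clips, count, min_gap, max_gap, rng=None):
    """Pick `count` clips at random, each followed by a random silence.

    Returns a list of (clip_name, gap_seconds) pairs. The caller would
    play each clip and then wait `gap_seconds` before the next one.
    """
    rng = rng or random.Random()
    schedule = []
    for _ in range(count):
        clip = rng.choice(clips)             # any clip may follow any other
        gap = rng.uniform(min_gap, max_gap)  # random space between phrases
        schedule.append((clip, gap))
    return schedule


# Hypothetical clip names; a fixed seed makes the run repeatable.
plan = schedule_clips(["phrase_a", "phrase_b", "phrase_c"], 5, 2.0, 8.0,
                      rng=random.Random(1))
for clip, gap in plan:
    print(f"play {clip}, then wait {gap:.1f}s")
```

Because no clip depends on what came before it, the result never loops in an audible way, which is presumably what made the approach a good fit for a non-linear game.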

My focus on adaptive music organically emerged as I was finding out the needs of these games. Since I was audio director and composer, I wasn’t on the outside thinking “Oh! Here’s some music”. I was on the inside and thought “We need something that helps these moments emotionally.” Of course, that’s going to require something that is flexible and dynamic. That’s how it started.

When I went to Monolith, someone said “The stuff you’re doing reminds me of DirectMusic. You should take a look at that!”. That’s when I started using software to augment my compositional abilities, by relying on features like variations and transitions. This took my adaptive music to a whole other level. When I started making adaptive music in the late 1990s, there wasn’t a lot going on. So, I became known for dynamic music. That was great for my career, as there weren’t a lot of people doing it, and when a game needed adaptive music they would typically call me! I expected a rush of other composers to jump into this realm, but that wasn’t the case. It’s been slower than I thought it would be!

3) Can you tell us a few examples of your favorite adaptive video game soundtracks from your repertoire?

One of the first games I did at Monolith Productions was called Shogo: Mobile Armor Division. It was the first in which I used DirectMusic. In this game, I explored the notion of various emotional intensities with a fixed transition matrix. The soundtrack had a pretty basic set, in that there were three levels of intensity plus silence. So, four emotional states or intensities overall. There was a matrix of transitions that could go from any one of those states to any of the others. I also had to figure out what boundaries worked well with these transitions.

Sometimes, the boundary could be 4 measures long or 8 measures long. When a call was made in the game, we needed action. At those moments, I couldn’t wait until the end of the 4-bar phrase. I had to transition pretty much at the next bar boundary, sometimes at the next beat. In Shogo, as well as in other action games, you often have to step it up and crank up the action immediately. Therefore, I had to create transitions that would quickly move out of, let’s say, an ambient type of music to an action tune. We would monitor the AI of the enemies and the number of enemies left. During a fight, when the enemies went down to a certain number, we would bring the music back to a quieter emotional state. By doing this, we were following the ebbs and flows of the action on the screen.
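The scheme described here, four states, a transition for every pair of states, and transitions that start on a beat, bar, or phrase boundary, can be sketched roughly as follows. This is a minimal Python illustration, not the DirectMusic setup used in Shogo: the state names, clip names, enemy thresholds, 4/4 meter, and the rule that escalations use bar boundaries are all assumptions.

```python
import math

# The four states described for Shogo: three intensities plus silence.
STATES = ["silence", "low", "medium", "high"]

# Hypothetical boundary sizes, in beats, assuming 4/4 time and 4-bar phrases.
BOUNDARY_BEATS = {"beat": 1, "bar": 4, "phrase": 16}


def build_transition_matrix(states):
    """Map every (from, to) pair of distinct states to a transition clip name."""
    return {(a, b): f"{a}_to_{b}" for a in states for b in states if a != b}


def next_boundary(position_beats, boundary):
    """Return the first boundary at or after the current position, in beats."""
    unit = BOUNDARY_BEATS[boundary]
    return math.ceil(position_beats / unit) * unit


def target_state(enemies_alive):
    """Pick an intensity from the enemy count (thresholds are made up)."""
    if enemies_alive == 0:
        return "silence"
    if enemies_alive <= 2:
        return "low"
    if enemies_alive <= 5:
        return "medium"
    return "high"


def plan_transition(current, enemies_alive, position_beats):
    """Return (clip, start_beat) for the needed transition, or None."""
    target = target_state(enemies_alive)
    if target == current:
        return None
    # Escalations cut in at the next bar; de-escalations wait for the phrase end.
    escalating = STATES.index(target) > STATES.index(current)
    boundary = "bar" if escalating else "phrase"
    matrix = build_transition_matrix(STATES)
    return matrix[(current, target)], next_boundary(position_beats, boundary)
```

For example, `plan_transition("low", 8, 5.5)` schedules the `low_to_high` clip at beat 8, the next bar line, rather than waiting for the phrase to finish at beat 16.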

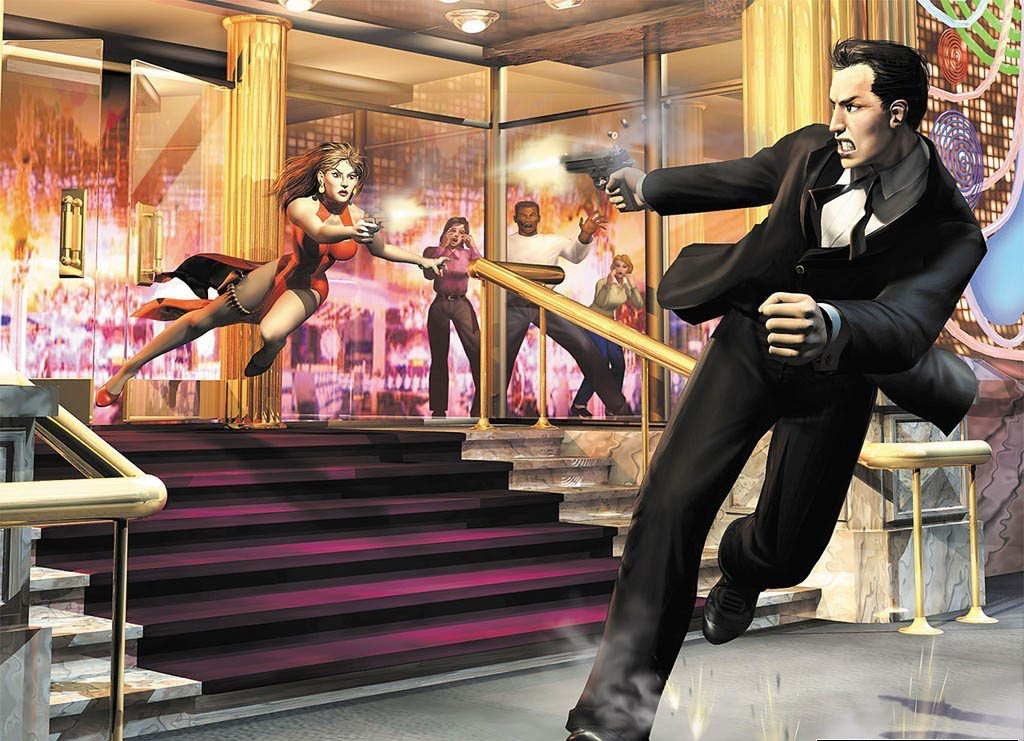

Another favorite score of mine is The Operative: No One Lives Forever, another Monolith game that came a couple of years after Shogo. No One Lives Forever is a first-person shooter in the spy genre. For this game, I had six moods or intensities with a transition matrix. That’s when I realized that by adding just a couple more moods, the transition matrix grows by a large factor! Six intensities made for a lot of work, so I had a couple of assistant composers to work through all these pieces and transitions. We did a lot more variation work for this game. The score used MIDI and sample banks. This made creating variations easier, as we could manually create one theme and then copy, paste, and change it quickly. It was all very manual, but we were able to create a lot of variations. What we found was that just by having four or five variations per instrument, all of a sudden the number of possible combinations across all the instruments in the score is in the thousands. We found that it wasn’t that hard to make these organic-sounding variations even manually. That was a big revelation!
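The arithmetic behind both observations is easy to check. The sketch below assumes one transition per ordered pair of distinct moods and one independently chosen variation per instrument; the five-instrument ensemble is an invented figure, since the interview does not say how many instruments were involved.

```python
import math


def transition_count(moods):
    """Transitions needed if every mood can move to every other mood."""
    return moods * (moods - 1)


def combination_count(variations_per_instrument):
    """Distinct combinations when each instrument picks one variation."""
    return math.prod(variations_per_instrument)


print(transition_count(4))             # Shogo: three intensities plus silence
print(transition_count(6))             # No One Lives Forever: six moods
print(combination_count([4] * 5))      # 4 variations on each of 5 instruments
print(combination_count([5] * 5))      # 5 variations on each of 5 instruments
```

Going from four states to six raises the transition count from 12 to 30, and under the five-instrument assumption four or five variations each give 1,024 or 3,125 combinations, consistent with the "thousands" mentioned above.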

4) What are the challenges of having an adaptive soundtrack in a video game?

Over the time I’ve been composing for games, there has been a trade-off between having adaptive music and high fidelity. The common idea was that if you’re going to make an adaptive soundtrack, you have to sacrifice the quality of the actual sound of the instruments. If I’m going to make something that’s interactive, maybe I have to go with MIDI and sample banks or with small chopped-up WAV files that can never sound like a mastered end product from the London Symphony Orchestra. So, there initially was this trade-off between fidelity and reactivity, given that adaptive music works best at a note-based level. I’ve always thought this was a fair compromise, though. For me, making the music dynamic was worth it. I hit a threshold of fidelity that matched the visual fidelity of the games of that era, the late 1990s. I would never put an orchestral score in Pac-Man; it just doesn’t match the visual technology. But now we’re at a point in technology where you can have your live-sounding orchestra and an adaptive score.

One of my better-known scores recently is Peggle 2. In this score, I used live recorded instruments. I went to a studio in Seattle and recorded the full orchestra, but section by section and phrase by phrase individually. So, we even did some single notes recording because I needed some pizzicato notes, but most of the work was done in little phrases. I built myself a mosaic of phrases and parts and bits that were reassembled by the music system and which evolves as you play the game. The score sounds ‘live’ because it uses live instruments. So, one of the challenges with adaptive music going forward is finding a way to have live sounding instruments with adaptability. It’s a lot of work, but even now, it’s possible.

5) What are the advantages of adaptive music for video games?

To me, more than an advantage, an adaptive score is mandatory in games. If it’s just linear files in a game, the larger those chunks are, the less they move with the visuals in the game and the more disconnected they are going to be from the experience. Also, the greater the likelihood that the player will say “I’m just going to play something from my jukebox!”. If all one’s getting is a 5-minute piece that loops, then nothing is interesting there. So for me, being adaptive is a starting point. There has to be some degree of adaptability in the music which works with the level of versatility of the game. To me, adaptive scoring is the scoring of the game. Without adaptability, you’re just throwing music libraries into the game without attachment to the game.

6) Is it difficult for a game company to integrate an adaptive music solution in their games?

Yes, I think it’s a challenge. A lot of colleagues say “Oh, I never get the programmer time or the resources to make adaptive music. I propose it, but it never goes anywhere!”. In this regard, I’ve been very fortunate because I’m fairly persistent! I have ways to approach game companies when suggesting adaptive music solutions, and not with a paper design. I always approach them with an actual working prototype to show how it’s going to work interactively. The challenge is to sell the idea and to gain the confidence of a game developer, who has to believe that interactivity will improve the game experience. Adaptive music is a genuine barrier for companies and composers. I believe that there is more resistance from game writers than from game companies. The thing is that game companies aren’t aware of what’s possible here either. So, a lot of what I do is educating people. The first step in the companies I work with or for is to explain what music in games does and what its potential is. More than an audio director, I’m a salesperson trying to convince companies that adaptive music is a good idea!

7) How keen are game companies on using adaptive music for the titles they release? Is there a difference between indie companies and big firms?

There’s a general lack of awareness of adaptive techniques in both big and small game companies, but I don’t think there’s a lack of interest. The problem is that even audio directors, who are the ones who call the shots on the music side of things, aren’t aware of the potential of adaptive music. Again, there’s a lot of educating to do at the level of the audio directors, producers, and composers too, to show them how adaptive music can improve the game experience. Over time, I’ve convinced both big and small companies to go for adaptive music by saying “I can do this. I’ve got it under control, and I will deliver”. AAA companies will tend to be more risk averse; it’s their nature. There might be medium-size companies more open to it. Indie game companies, which usually strive for innovation, will be open to it if they have the right people who are interested in the process. For me, it’s all about the right composer and the right audio directors helping to sell the idea to whatever company.

8) Do you see a connection between procedurally-generated games and adaptive music?

Yes, in a huge way! The score has to match both the medium itself and what the designer is doing in that medium. There are so many kinds of non-linear experiences even within games. Take Uncharted, for example. This game is undetermined, but it still has overall linear storytelling. Each section is unpredictable, but you progress through predetermined chapters. That calls for one kind of score, and I think that Sony does an excellent job with dynamic music full of stingers and layers that come and go. But that’s different from an open-world scenario where players can start anywhere and do anything. This poses an entirely different set of musical challenges. In a world that is self-generative, I’d like to sit down with the design team and ask “What are the important things here? What’s the player going to find fascinating?”. Do they want to tie music to primary biomes that self-generate, or do they want to link it to different characters and races that come into being spontaneously? There are a lot of fascinating ways you could approach this. The risk is that you get lost as a composer too. It’s easy with adaptive scores to do things adaptively just because you can! And that might not be the best solution.

9) What tools do you use to create adaptive music?

It’s evolved, and it’s evolving still. As a foundation tool, I use Wwise. I think I know every in and out of its interactive system! Wwise has all the infrastructure for integrating both MIDI and samples, but also WAV-based material and stingers in layers, vertically and horizontally. It also has real-time DSP. In a word, it’s my primary engine. Now, I’m starting to experiment with Heavy, developed by Enzien Audio. This application allows you to create Pure Data patches and turn them into Wwise plug-ins. The idea here is that you can create DSP plug-ins and synth plug-ins. I’m also looking at creating dynamic MIDI plug-ins where I can send MIDI messages in, manipulate them in some way, and have an output. Once you get this kind of virtual space for the instruments and the mixing, even algorithms can be built on top of it. We’re working in Unity and have developed an audio system in C#. Wwise doesn’t have much logic above its core, so where it ends, we’re using things like the scripting layer in Unity to add more intelligence to the music systems we use. For example, in Peggle Blast the scripting system was what told us what chord we were playing, so it was possible to play the right notes on top of the chord.
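To make the last point concrete, here is one way a scripting layer that knows the current chord could keep notes in harmony: move each requested note to the nearest chord tone. This is a hypothetical Python sketch, not the C# system used in Peggle Blast; the function name and the choice to prefer the lower note on a tie are assumptions.

```python
def snap_to_chord(note, chord_pitch_classes):
    """Return the MIDI note nearest to `note` that belongs to the chord.

    `chord_pitch_classes` is a set of pitch classes (0 = C ... 11 = B).
    On a tie, the lower candidate wins (an arbitrary choice).
    """
    if not chord_pitch_classes:
        raise ValueError("chord must contain at least one pitch class")
    # Any pitch class is at most 6 semitones away from a given note.
    for offset in range(7):
        for candidate in (note - offset, note + offset):
            if candidate % 12 in chord_pitch_classes:
                return candidate
    raise ValueError("pitch classes must be integers from 0 to 11")


C_MAJOR = {0, 4, 7}                       # C, E, G
melody = [60, 61, 62, 66, 69]             # raw notes requested by gameplay
print([snap_to_chord(n, C_MAJOR) for n in melody])
```

With the chord supplied by the game's scripting layer at runtime, the same gameplay event can trigger a different pitch depending on where the harmony currently is.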

10) Can you give an example of a system you had to build or design to integrate adaptive music into a game?

When I was at Sierra, we did some really basic things in code. I hardly would call that building an adaptive system. What we developed was able to play tunes randomly and do some cross-fades. We implemented this because at the time there was no audio engine. Since then, virtually every score I’ve done has at least a program like DirectMusic or Wwise as a foundation for integration. In all of these cases, there were scripts built around these systems.

11) Do you think adaptive music is going to have a role in Virtual Reality experiences?

In a big, big way! Adaptive music is going to be the thing, especially as deeper emotional experiences and games come out of VR. There will be a greater need for more subtle adaptability. When folks are making experiences that traverse a much broader range of emotional depth, there’s going to be a call for the music to do much more subtle things. Only adaptive techniques can do that. That’s especially true if you want a tighter integration between visuals and design. Composers who aren’t familiar with adaptive music are going to find themselves in a little bit of trouble with VR!

12) How do you think Artificial Intelligence is going to impact the music making process in the interactive media environment?

You should begin by defining what you mean by AI. A basic algorithm can feel like AI, even if it’s just a randomiser or a variation creator of some kind. I think AI in more sophisticated ways will help the compositional process. As a composer, I picture myself crafting themes and then using the AI to augment variations or even different intensities, knowing that the workload of creating an adaptive score is massive. I see AI-assisted composition where I can curate what the AI comes out with and tweak that. This could be used for harmonic progressions. I can compose a sequence, and then the AI can vary that, making, for example, the progression faster or slower.

13) What do you see as the future of adaptive music? Do you think the paradigm in which people engage with music will fundamentally change?

Mechanically, I see a trajectory that seems pretty obvious. AI is affecting every aspect of technology, and music is no exception. The use of AI in music making seems inevitable. As a human, I am particularly interested in the question: how do I interact with those AI systems? There’s the world where you press a button, and there’s a producer that comes out with a piece of music, and I can tweak the knobs. That will happen, as it is an extension of what’s already going on with the use of music libraries. There’s another, more sophisticated world where you don’t get full tracks, but different stems that you can stitch together. The next step is an algorithm that does the stitching for you. I see this happening, and controversies coming up around this innovation!

Ultimately, I’m interested in keeping humans in the equation by working with these machines to create wonderful musical experiences for the players. Rather than becoming an audio director who curates the different tracks provided by the AI, I want to remain a composer and collaborate with these systems and influence them. So, there’s a broad spectrum here: the AI does everything, you can work with it to a degree, and then you can compose with just a little bit of AI.

The algorithmic composition has to happen in conjunction with the fidelity side. In other words, the best AI in the world will go nowhere if it doesn’t sound satisfying to the producers. That’s why I’m also interested in this balance between live recording and note-based MIDI, and to bring those two things together to arrive at a result that feels very organic and as convincing as a full recording of the London Philharmonic. If AI-based music is going to have any serious aspiration in the industry, it has to sound great. In the late 1990s, it looked like adaptive music was going to take off, but it didn’t, partly because orchestral recording eclipsed it. Every composer on the planet ran towards instrumental recording and abandoned adaptive music in the name of “make it live, make it organic.” It’s necessary to give the industry and these composers a sense of ownership when they work with these algorithms, but also for the end-users and for the producers they should say “Damn! That sounds as good as the movie I just saw!”.

By Valerio Velardo

Read the full interview at Melodrive.com
